Over the past decade, “data scientist” has become one of the most attractive job titles in tech.

It promises impact, influence, and technical depth.

It suggests working on hard problems, building models, and shaping decisions with data.

When people talk about feature engineering, SQL is often treated as a second-class citizen.

You’ll hear things like:

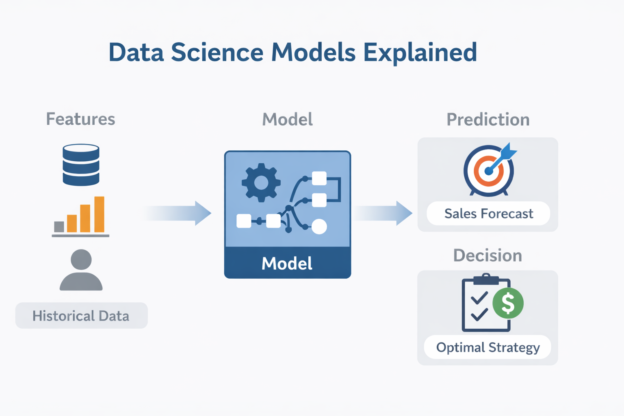

If you’ve worked in BI or analytics long enough, you’ve probably heard people talk about models as if they were something mysterious.

“Once we build a model, we can predict this.”

“The model says this user will churn.”

“We need a better model for this problem.”

If you’ve ever run an A/B test, you’ve probably seen this happen:

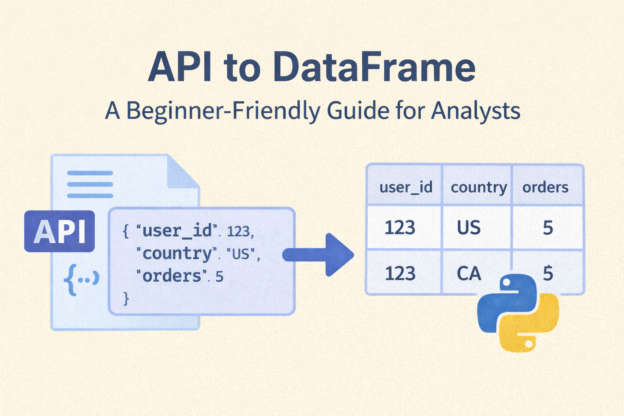

As a data analyst, you’re probably very comfortable working with SQL tables, CSV files, and Excel spreadsheets. But sooner or later, you’ll run into a situation like this:

If you work with data — in analytics, BI, or data engineering — you’ve probably heard the term dbt (pronounced “dee-bee-tee”). It has become one of the most popular tools in the modern data stack because it empowers analysts to build production-grade data pipelines using just SQL.

A/B testing (or split testing) is one of the most powerful tools in an analyst’s toolbox: it allows you to compare two (or more) versions of a web page, feature, or user experience — and determine which version truly performs better.
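
For a simple conversion-rate test, one common way to decide whether version B "truly" beats version A is a pooled two-proportion z-test. The sketch below is a minimal illustration under stated assumptions: a binary conversion metric, independent users, and samples large enough for the normal approximation. The function name and the conversion counts are hypothetical, and the z-test is one standard choice, not necessarily the method the full article uses.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test (hypothetical helper).

    Returns (z, two-sided p-value) for the difference in conversion rates B - A.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Under the null hypothesis both versions share one conversion rate,
    # so estimate it from the pooled counts.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: A converted 200 of 5,000 users, B converted 250 of 5,000.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be noise alone; it says nothing about whether the lift is large enough to matter for the business.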

When building data-driven solutions — whether dashboards, reports, or analytical pipelines — you often focus on selecting, transforming, or visualizing data. But to make all that work, you need a well-structured database behind the scenes. That’s where Data Definition Language (DDL) comes in.
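
To make "DDL" concrete, here is a minimal sketch that runs a few DDL statements (`CREATE TABLE` with keys and constraints, then `ALTER TABLE`) against an in-memory SQLite database through Python's built-in `sqlite3` module. The table and column names are hypothetical, and other databases (PostgreSQL, Snowflake, and so on) differ in type names and constraint support.

```python
import sqlite3

# In-memory database; the schema below is a hypothetical example.
conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is enabled.
conn.execute("PRAGMA foreign_keys = ON")

conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    email       TEXT NOT NULL UNIQUE
);

CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    amount      REAL CHECK (amount >= 0)
);

-- ALTER changes the structure of a table that already exists.
ALTER TABLE orders ADD COLUMN created_at TEXT;
""")

# Inspect the resulting structure: row[1] of table_info is the column name.
columns = [row[1] for row in conn.execute("PRAGMA table_info(orders)")]
tables = sorted(r[0] for r in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'"))
print(tables, columns)
```

None of these statements touch data; they only define the structure that later `SELECT`, `INSERT`, and reporting queries rely on.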

JSON (JavaScript Object Notation) has become the universal language for exchanging data between applications. Whether you’re pulling data from APIs, storing logs, or dealing with semi-structured data in a data lake — JSON is everywhere.
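
A typical first step with semi-structured JSON is parsing it and flattening nested fields into table-like rows. The sketch below uses Python's standard `json` module on a hypothetical API payload; the field names (`user`, `events`, `amount`) are assumptions for illustration only.

```python
import json

# Hypothetical API response: a nested object plus an array of events.
payload = """
{
  "user": {"id": 42, "name": "Ada", "plan": null},
  "events": [
    {"type": "login", "ts": "2024-01-01T10:00:00Z"},
    {"type": "purchase", "ts": "2024-01-01T10:05:00Z", "amount": 19.99}
  ]
}
"""

# Objects become dicts, arrays become lists, and null becomes None.
data = json.loads(payload)

# Flatten: one row per event, repeating the parent user's fields on each row.
rows = [
    {
        "user_id": data["user"]["id"],
        "user_name": data["user"]["name"],
        "event_type": event["type"],
        "event_ts": event["ts"],
        # Not every event carries an amount; .get() yields None when it is missing.
        "amount": event.get("amount"),
    }
    for event in data["events"]
]

for row in rows:
    print(row)
```

Using `.get()` for optional keys reflects a common property of semi-structured data: records of the same kind do not always share the same fields.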

If you work with data long enough, you’ll eventually face the classic “Why does my number look weird?” problem.
